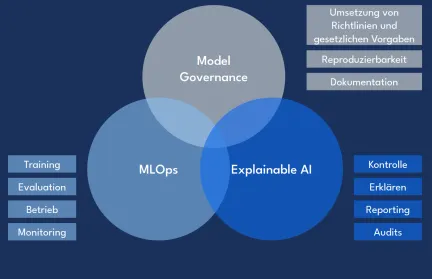

The capabilities of pre-trained Large Language Models (LLMs) open up new possibilities for building recommendation systems. However, data protection requirements, limited data availability, and, not least, the black-box nature of LLMs stand in the way of their use.

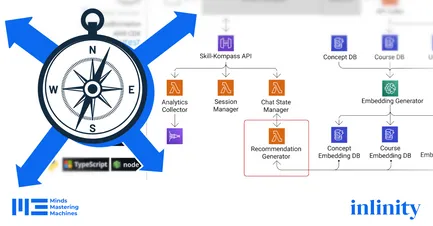

We show how Explainable AI and feedback mechanisms support users' self-determined use of such a recommendation system. In addition, we present strategies for operating LLM-based solutions cost-effectively and in compliance with data protection requirements.